Apparently Apple will introduce an “iWatch” tomorrow. What has been shown so far does not convince me, and since I have been toying with a few concepts in my head for a long time, I have simply applied them to a smartwatch of 2034.

You no longer need bulky computers; a wafer-thin wristband is enough. In the future, all components such as chips, sensors, conductor tracks, displays and solar cells can easily be printed on a carrier material such as foil, cloth or paper (source), so by then it will be no problem to create extremely powerful “hardware” for every conceivable application. The body serves as a storage medium (hard disk, cloud), as a conductor and as an additional energy source. The body’s own senses can be queried and processed by the wristband if desired. For example, if I touch a cup, I can see on the display how hot my coffee is.

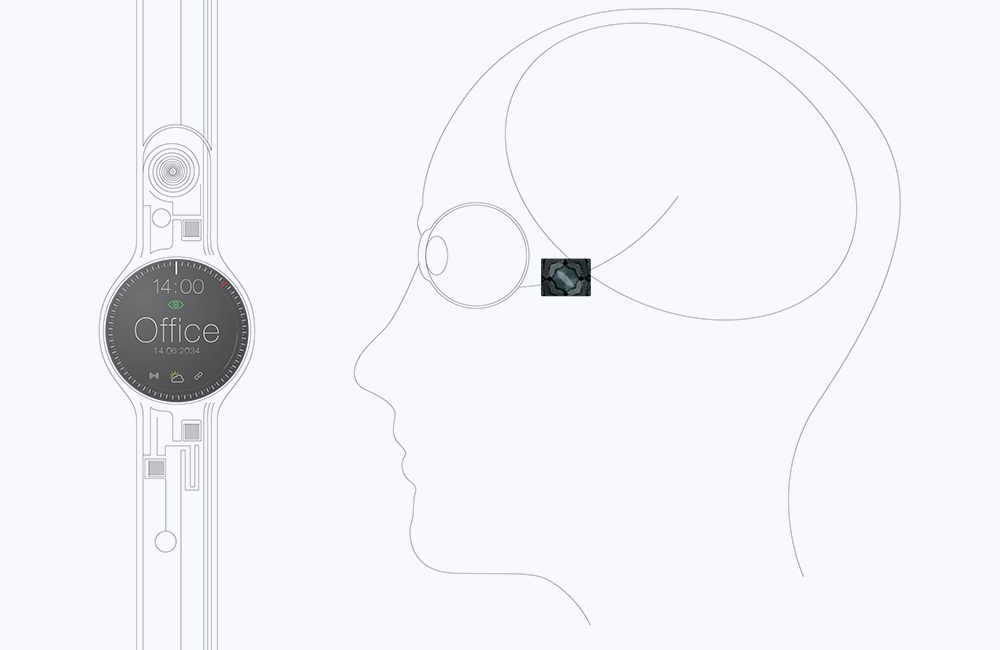

The visual output medium can be the wristband’s printed display. But you can also stick a chip on your temple that exchanges information with the eye or the brain (source). Images, information or your work are then overlaid on your field of view at commonly defined positions (the advertising will be annoying), similar to a head-up display. Of course, the whole thing can “still” be switched off mechanically.

So how would my office be equipped in 2034? Today, my monitor still dominates my desk, but in 20 years’ time it will of course no longer be there. In its place, a virtual display will appear before my eyes, and, once I grant permission, other people will be able to see it in the same place where I see it. In other words: an “augmented reality” that is built into the body. The output via the optic nerve can also serve as input: everything you see, and every interaction with what you see, can be processed.

If I transfer this to an automotive example, it could look like this:

I reach for the door of my car, and it recognizes me by my signature (which can only be decoded by certified objects), which is transmitted from my wristband through my body and hand to the handle. I can open the door, and the necessary information appears in the usual places, virtually overlaid in my field of view. If a passenger is sitting next to me, the car’s system recognizes him or her and first requests my approval. Only then does the passenger get access to the infotainment functions relevant to him or her. On my steering wheel (yes, that still exists!) I see virtual controls. More extensive input, such as a search or a destination entry, is done by voice, which now works like a normal conversation (ten years later, cognitive input and control will be possible via the optic nerve chip; source).

A sensor in the wristband, which records body temperature, controls the air conditioning system. The music library, which is stored in my little finger, streams the desired tracks wirelessly from the wristband to the head unit and from there to the output devices.

My address book is accessible from my thumb and is forwarded to the navigation software in the wristband.

The wristband recognizes dangerous situations and can react faster than the brain. It sends an impulse to the brakes and the steering wheel, making driving much safer. All vehicles will keep a pre-defined distance from one another, which avoids traffic jams, and the system will give predictive speed recommendations based on energy consumption requirements.

And so on and so on …